The Flight Physics Concept Inventory: Reliably Evaluating Aerodynamic Lift, Drag and Associated (Naïve) Concepts of Flight in Class and In-Game

Editor: Melissa Dancy

Abstract

Students often struggle to transfer conceptual understanding from isolated instruction to a coherent mental model. This is especially true for the context of flight physics, fluid dynamics, or the concepts of aerodynamic lift, drag, stall, center of mass, and angle of attack. To elicit naïve concepts and expert models, we have developed a new, automatically scored multiple-choice test on introductory fluid dynamics. The Flight Physics Concept Inventory (FliP-CoIn) was developed for online or paper use as well as for pre- and/or post-evaluation. In its gamified form as a free-to-use Particify™ quiz, FliP-CoIn can also serve as a formative assessment and collaborative team-building event. Distractors are based on naïve student concepts, and we have optimized the instrument’s validity and reliability. We present example data and questions for comparing student data and interpreting results. We describe a gamified lesson (similar to an Interactive Lecture Demonstration, ILD) that addresses naïve concepts in flight physics and fluid dynamics collaboratively with the help of our newly developed assessment instrument. We share flight physics and fluid dynamics resources including the FliP-CoIn instrument, supporting materials, a lesson plan, lesson resources, videos, and three Particify™ quizzes.

Primary Image: A coin in a fluid flow with visible turbulence.

Citation

Genz F, Bresges A, Falconer KA. 2023. The Flight Physics Concept Inventory: Reliably Evaluating Aerodynamic Lift, Drag and Associated (Naïve) Concepts of Flight in Class and In-Game. CourseSource 10. https://doi.org/10.24918/cs.2023.27

Introduction

Flight is one of humankind’s most fascinating endeavors; countless books, poems, inventions and love stories have been inspired by the desire to understand it. Despite this, most standard textbooks in school only superficially cover the topic and no standardized assessments related to aerodynamics exist (1). The result is oversimplified and erroneous explanations, which lead to naïve concepts (also called alternative, pre- or misconceptions) (2).

However, even brief, concise and correct explanations will most likely strengthen misconceptions in student minds, and less likely foster expert concepts—meaning students become more confident in their existing concepts (2, 3). This sounds paradoxical at first, but makes sense in learning psychology (4): students are not empty vessels to be filled, rather students enter a class with many childhood-developed mechanics concepts about the world, based on prior lived experience. When students only receive a good explanation, and this explanation is not contrasted against their prior knowledge, students are likely to cherry-pick what they hear, and often feel even more secure in their prior (naïve) conceptions (5). An example of this is the idea that “aerodynamic lift always points upwards.”

Therefore, contrasting naïve to expert concepts is key in conceptual change instruction (6). By conceptual change instruction we mean (i) making students’ prior knowledge explicit (e.g., noting down, discussing, voting); then (ii) providing student activities and questions to challenge these conceptions; and (iii) comparing prior student concepts to later ones.

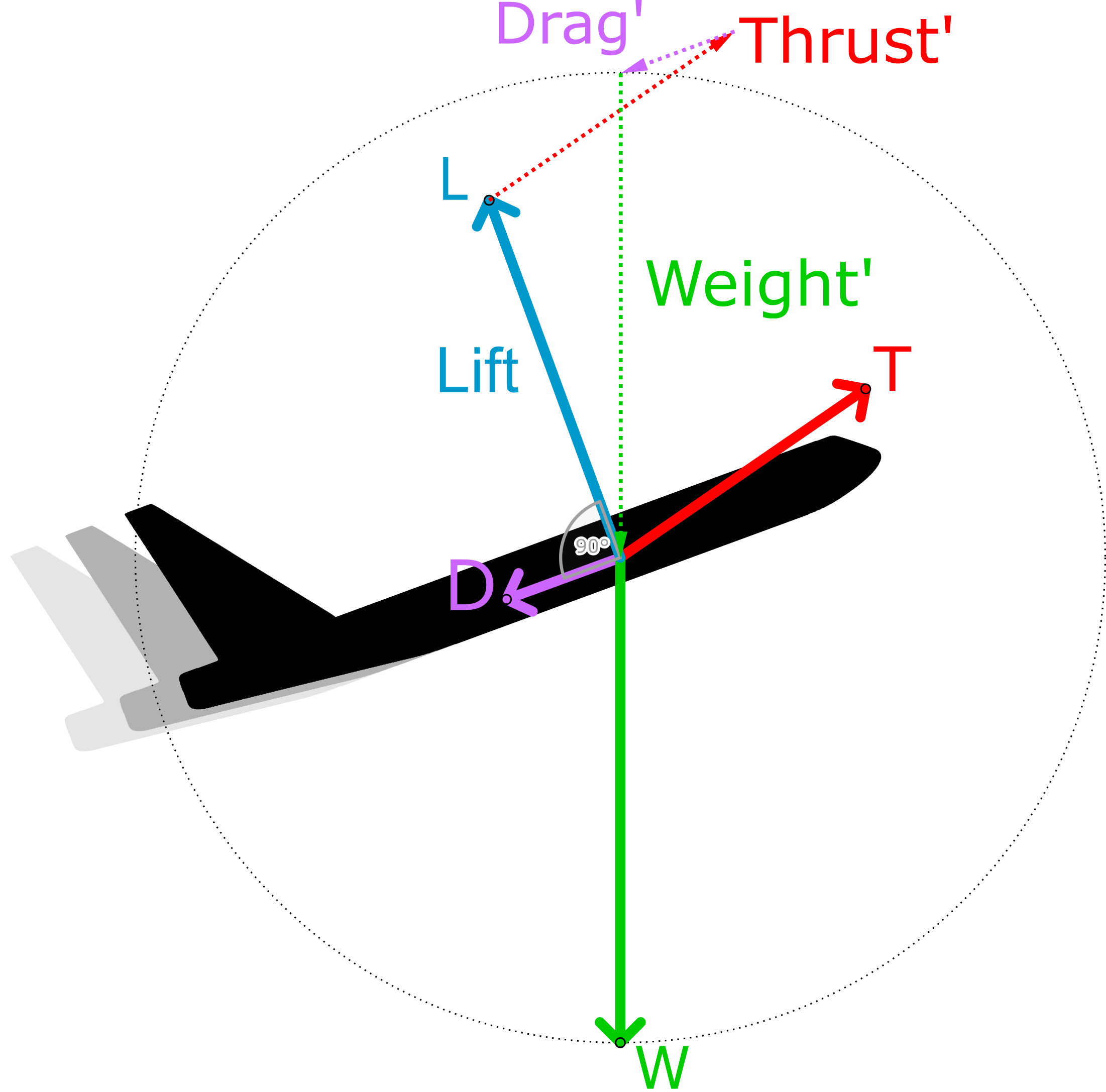

The second problem is that sometimes even “good” explanations are absent. Too often, aerodynamic lift is explained by the wrong notion that the Bernoulli principle is its primary cause, rather than a result of the deflected air (7). This naïve rationale often traps college students with a physics, engineering or aviation major into the false reasoning that a path length difference of air over and under the wing is how lift is generated (e.g., “Because the air’s path length over the wing is longer, the air flowing on the topside of the airfoil must be faster; this creates a lower pressure on the topside of the wing”). It even leads to the wrong notion that the airfoil profile shape itself is generating most lift, and angle of attack is less important than shape. Even Albert Einstein briefly believed that an airfoil’s shape is the primary lift factor and published a paper about a cat’s arched back wing (8)—which almost killed a test pilot. Furthermore, when taught in the context of forces and free body diagrams, illustrations are often deceptive or inaccurate, showing only horizontal flight, and lift pointing exclusively upward, when in fact aerodynamic lift is more complex (i.e., it is perpendicular to the direction of flight).

Finally, flight physics is a neglected interdisciplinary topic, missing from the standard instructional canon. We like to think of flight physics as a crosscutting topic, encompassing several rich areas. It can cover a huge range of contexts and applications (e.g., bird flight, drag-optimized shapes in nature, bulbous bows on ships, or wind turbines). In medicine and politics, accurate models for fluid flow are more important than ever, for example for modeling virus spread and managing the associated risks. Climate change models for ocean and atmosphere movement are paramount in times of global warming. For a comprehensive collection of fluid dynamics lessons and resources, see the open NASA and AAPT publication “Aeronautics for Introductory Physics” (9).

Flight Physics in US and German Curriculum

Beyond the obvious use for flight schools and engineering institutions, the activities we describe also cover and deepen many aspects of current science curricula in college and high school. The US Next Generation Science Standards (NGSS) include the crosscutting concepts of “cause and effect” and “scaling,” which come up centrally when studying flight physics and aerodynamics. In addition, the NGSS category for “motion and stability” contains the subsection “forces and interactions.” Flight physics is highly motivational, and provides an opportunity to deepen and apply earlier basic knowledge from other contexts. Notably, the largest physics society in the world, the German Physical Society (DPG), has started listing “fluid dynamics” as a core concept for K–12 (10, p. 28).

Source of Inspiration and Prerequisites

Our interactive lesson was inspired by a frequently viewed and downloaded CourseSource lesson in biology (11).

For our activity, no prerequisite student knowledge or skills are required beyond vector representations. However, conflicting prior knowledge is presumed on the student side (2–5). On the teacher side, we assume some basic familiarity with flight physics and conceptual learning (12–25).

Gamification: Transforming an Instrument Into an Interactive Lecture Quiz

Why We Gamify

Active student engagement improves learning outcomes (26, 27). Moreover, educational games enhance problem solving, communication and cooperation skills (28). We advocate an activity akin to Sokoloff’s Interactive Lecture Demonstrations (29), one that we call an Interactive Lecture Quiz. Despite this, students often claim to learn more through traditional lecturing, but studies show that this self-perception is incorrect (30, 31). Active learning feels more difficult for students at first, and they might even complain that teachers are not doing their jobs right. The gamification of lectures, enriched with highly social active learning elements, is our solution to this student perception bias. It allows for students to receive the benefits of student engagement while avoiding the perception amongst students that the work is harder. As such, we give implementation tips on how to gamify lectures and assess them with the FliP-CoIn instrument in the following section.

Lesson Structure and Spirit

In earlier publications, we described a gamified lab lesson using flight simulators, 2D wind tunnel apps (32) and low-cost experimental setups (33). Here, we focus on an approach for large lectures that promotes active learning. The core idea is an online quiz in which student teams make predictions in constructive competition. The questions are projected onto the wall of the lecture hall or displayed on student smartphones. Student teams can answer in any browser. Team scores are presented instantly after each question. In this manner, student teams will know whether they have an expert understanding or need to pay more attention to this particular part during the lecture. We even recommend splitting up the lecture and interweaving the questions to create many phase changes and islands of active learning (34).

The structure of this lesson was designed to be repetitive and modular (see Table 1). This allows students to concentrate on the content. Usually the first quiz round takes a bit longer than 15 min, and the following rounds a bit less. When time is short, one can simply skip Phase 6 or the last questions. In Phase 4, there is no need to mention the correct answer; research suggests it is even counterproductive to do so (5, 30). It is only important to point out, or let students point out, why and where each distractor option fails. This constructive confusion might not lead to perfect expert understanding instantly, but students are likely to improve on their mental models and, more importantly, own them. Students are likely to continue refining their model when new scenarios challenging these concepts are presented. For the complete lesson plan, see Supporting File S2.

Table 1. Spiraling procedure. The lesson core repeats with every quiz round. Phase 6 is optional.

| Spiraling Procedure (75 min for 5 quiz rounds) | |
|---|---|
| Phase 1 (3 min) | Finding a Team Answer (e.g., slide 7): “Come up with a team answer. Why do you think so?” |
| Phase 2 (3 min) | Reflection 1 (e.g., slide 8): “Discuss with your team: ‘Which beliefs could one hold that lead to the other options?’” |
| Phase 3 (2 min) | Rationales (e.g., slide 9): “Discuss with other teams or all: ‘What is the rationale for your team choice?’” |
| Phase 4 (5 min) | Results (e.g., slide 10): Show results and talk about why distractors are wrong. |
| Phase 5 (1 min) | Team Scores: Show team scores briefly between questions. |
| Phase 6 (3 min) | Reflection 2: “How was the decision made? Focus on body language.” |
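The timing in Table 1 can be checked with a few lines of arithmetic. The following is a minimal planning sketch, not part of the published lesson materials; the dictionary and function names are our own illustrative choices, and the durations are the nominal ones from Table 1.

```python
# Nominal phase durations in minutes, copied from Table 1.
PHASES = {
    "Phase 1: team answer": 3,
    "Phase 2: reflection 1": 3,
    "Phase 3: rationales": 2,
    "Phase 4: results": 5,
    "Phase 5: team scores": 1,
    "Phase 6: reflection 2": 3,
}
# Table 1 marks Phase 6 as optional.
OPTIONAL = {"Phase 6: reflection 2"}


def round_minutes(include_optional: bool = True) -> int:
    """Nominal length of one quiz round in minutes."""
    return sum(
        minutes
        for name, minutes in PHASES.items()
        if include_optional or name not in OPTIONAL
    )


def lesson_minutes(rounds: int, include_optional: bool = True) -> int:
    """Nominal length of a lesson with the given number of quiz rounds."""
    return rounds * round_minutes(include_optional)


if __name__ == "__main__":
    print(round_minutes(include_optional=False))      # one round without Phase 6
    print(lesson_minutes(5, include_optional=False))  # five rounds without Phase 6
```

With these nominal values, a round without Phase 6 comes to 14 min, so five such rounds take about 70 min and leave a little slack in a 75 min session for the longer first round. Running Phase 6 in every round would add 15 min, which is one reason to treat it as optional.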

Implementation Tips for a Gamified Lecture

Below, we summarize the lecture’s essentials:

- Emphasize that the focus of the quiz is not on how many answers they get correct, but on gaining clarity about the reasons and explanations for why they chose an answer.
- Build heterogeneous or randomized groups of 3–4 students. Let groups find a team name (for group identification).
- For best learning effects, we present team scores after each question and give time to let students talk to other groups before moving on.
- After each question (block), we explicitly name the underlying alternative concepts and give vivid examples where they fail. This is THE crucial step for preparing for the next step.
- When enough cognitive dissonance (27) has prepared the learners’ minds, we explain the expert concept or let student groups present them. Encourage visual examples, metaphors and/or storytelling, while referring back to the differences between naïve and expert explanations (3, 5).
- Sort and/or select the questions by concept domain. This way, your students can better track their learning process and identify their learning gains.
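For the randomized groups of 3–4 students mentioned in the tips above, a short script can do the assignment before class. This is a minimal sketch under our own assumptions: the function name `make_teams` and the `seed` parameter are hypothetical, and the quiz platform itself may offer its own way of forming teams.

```python
import math
import random


def make_teams(students, seed=None):
    """Shuffle students and split them into teams of 3 or 4 members."""
    n = len(students)
    # 0, 1, 2 and 5 are the only class sizes that cannot be split into 3s and 4s.
    if n in (0, 1, 2, 5):
        raise ValueError(f"cannot split {n} students into teams of 3-4")
    shuffled = list(students)
    random.Random(seed).shuffle(shuffled)  # seed makes the draw repeatable
    team_count = math.ceil(n / 4)          # fewest teams with at most 4 members
    base, extra = divmod(n, team_count)    # 'extra' teams get one more member
    teams, start = [], 0
    for i in range(team_count):
        size = base + (1 if i < extra else 0)
        teams.append(shuffled[start:start + size])
        start += size
    return teams


if __name__ == "__main__":
    roster = [f"student_{i}" for i in range(1, 11)]  # hypothetical roster
    for number, team in enumerate(make_teams(roster, seed=42), start=1):
        print(number, team)
```

Heterogeneous grouping (for example by prior score or major) would need extra information about the students and is not covered by this sketch.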

Finally, plan enough time for the active learning elements, especially when students are not familiar with active learning in lectures (35). In Supporting File S1, you will find at least three ready-to-use quizzes sorted by concept domain: lift, drag and angle of attack (with currently 6, 19 and 5 questions, respectively). Moreover, Supporting File S1 contains presentations and video clips for a gamified lesson, including explanations for instructors after some tough questions.

How FliP-CoIn Assesses Student Concepts

According to cognitive dissonance theory, eliciting prior student concepts early is crucial for preparing learning (4). Hence, the FliP-CoIn instrument (see Supporting File S3) was developed as a multiple-choice single-response instrument with automated scoring. In the online version, students can receive direct feedback, and teachers get instant access to class comparisons via the PhysPort.org data explorer. For the evaluation of the paper-pencil version, a spreadsheet for scoring with a coding mask streamlines the visualization of results. For more details of how one can evaluate student concepts with FliP-CoIn, please consult Supporting File S4.
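To illustrate what automated scoring of a single-response multiple-choice instrument involves, here is a minimal sketch. It is not the FliP-CoIn scoring spreadsheet or the PhysPort data explorer; the question labels, the answer key, and the function names are hypothetical placeholders.

```python
from collections import Counter

# Hypothetical answer key for illustration only; NOT the real FliP-CoIn key.
ANSWER_KEY = {"Q1": "B", "Q2": "D", "Q3": "A"}


def score_student(responses, key=ANSWER_KEY):
    """Return the fraction of keyed questions a student answered correctly."""
    correct = sum(1 for q, right in key.items() if responses.get(q) == right)
    return correct / len(key)


def distractor_counts(all_responses, question, key=ANSWER_KEY):
    """Count how often each wrong option was chosen for one question."""
    picks = Counter(r[question] for r in all_responses if question in r)
    picks.pop(key[question], None)  # drop the correct option, keep distractors
    return picks


if __name__ == "__main__":
    # Two made-up response sheets.
    class_responses = [
        {"Q1": "B", "Q2": "A", "Q3": "A"},
        {"Q1": "C", "Q2": "A", "Q3": "A"},
    ]
    print([score_student(r) for r in class_responses])
    print(distractor_counts(class_responses, "Q2"))
```

Because each distractor is tied to a naïve concept, the distractor tally is often more informative for teaching than the total score.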

Common Naïve Concepts of Flight Physics

Despite our previous knowledge about the prevalence of common misconceptions, we were stunned by the abundance of naïve concepts collected with the FliP-CoIn instrument. Hence, we assembled a “Top 10” list with the most common naïve concepts. To avoid repeating these naïve notions, and to create cognitive dissonance in students’ minds, we inverted their meaning (see Table 2). These Top 10 statements are designed to challenge most students’ naïve prior concepts. As noted above, creating cognitive dissonance first is crucial for overcoming these naïve concepts (2). We use the term naïve concepts in the sense of non-expert concepts or mental models, which are lacking some details to fully grasp the given physics phenomenon. In front of students, we prefer the term alternative concept, since even non-expert concepts are a valuable step on the way to a more scientific understanding.

Table 2. Challenging statements tackling the most common naïve student concepts.

| Concept (sub)domain(s) | Challenging statements for promoting discussion, engaging the most common naïve concepts |
|---|---|
| Lift direction | Dynamic lift does not (necessarily) point upwards. |
| Pathlength and lift | Pathlength difference does not explain lift. |
| Continuity of airflow | Air over and under the wing does not rejoin at the trailing edge. |
| AoA and lift | Angle of Attack (AoA) can decrease while lift increases. |
| Generating lift | Shape (curvature) of the wing is not more important than AoA. |
| Streamlines | Displaced air does not reach its original height again. |
| Avoiding drag | A pointy frontside is not (necessarily) the most aerodynamic solution. |
| Avoiding drag | Shorter objects (with less surface area) may experience more drag. |
| CoM position | Center of Mass is not near the luggage cabin. |
| Flight experience | Speed does not exert the most significant influence on passenger forces. |

Addressing our Top 10 challenging statements requires active student engagement, modeled here via the gamified lecture with group work described in the Gamification section.

Common Naïve Concepts of Aerodynamic Drag

Question 5 of the FliP-CoIn instrument asks students which one of two bodies experiences less aerodynamic drag in 13 pairwise comparisons. All bodies have the same maximum cross-sectional area facing the wind (ø = 2 cm, see Table 3). According to our data, the most common naïve concept concerning aerodynamic drag is the notion that “a pointy nose is best.” More than 50% of our students rank B higher than A. Common student rationales are (i) “race cars are pointy, too,” (ii) “one has to split the air first,” (iii) “it looks closest to a bullet to me,” or (iv) “the backside is not so important.” Experts know that a pointy backside/tail is more important than a pointy frontside for avoiding turbulence and usually reason more like: “The high pressure area in front of the body will function as an air cushion, making the body’s frontside relatively slippery anyway.” Of course, a pointy backside in combination with a pointy frontside is even more slippery, but this is deliberately not an option in the assessment.
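Why the shape matters even though all bodies present the same frontal area can be made explicit with the standard drag equation. The symbols below are our own additions and not notation from the instrument: $F_D$ is the drag force, $\rho$ the air density, $v$ the flow speed, $c_D$ the shape-dependent drag coefficient, and $A$ the cross-sectional area facing the wind, assumed circular with diameter $d$ = 2 cm.

```latex
\begin{align}
  F_D &= \tfrac{1}{2}\,\rho\,v^{2}\,c_D\,A,
  \qquad A = \pi \left(\tfrac{d}{2}\right)^{2} \approx 3.14\ \text{cm}^2
  \quad (d = 2\ \text{cm}) \\
  \frac{F_{D,1}}{F_{D,2}} &= \frac{c_{D,1}}{c_{D,2}}
  \qquad \text{(same } \rho,\ v,\ A \text{)}
\end{align}
```

Under these assumptions, comparing two bodies in the same airflow reduces to comparing their drag coefficients, which is exactly the shape effect the pairwise comparisons probe.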

Table 3. Drag bodies sorted from low to high aerodynamic drag (top to bottom).

| Drag Bodies | |
|---|---|
| A) | drop1 (round side facing wind) |
| B) | drop2 (pointy side facing wind) |
| C) | filled half sphere (round side facing wind) |
| D) | sphere |
| E) | filled half sphere (flat side facing wind) |
| F) | coin / flat cylinder (flat side facing wind) |

By far the most common misconception is indicated by the fact that usually about three quarters of students pick body D over C in direct comparison. This is consistent with the concept of “avoid sharp edges,” “a tail is good,” and “symmetry makes slippiness.” However, it contradicts actual measurements. Experts argue that the surface drag is smaller at C, and sometimes also that stable vortices in the wind shadow are forming a somewhat pointy tail behind drag body C.

Surprisingly, some students see the sphere as the “optimal shape” concerning drag, and prefer the sphere over all other drag bodies (14% of students). This might be due to memorized sentences from mathematics or Greek history like “a sphere is the perfect shape,” which are overgeneralized and bleeding into the physics domain. Further, since some students (13%) hold the naïve concept “a flat end” or “the backside does not matter,” it is particularly hard for these students to decide between C and D, or between E and F.

Common Naïve Concepts of Aerodynamic Lift

Lift is defined as perpendicular to the direction of flight. Even though most students seem to know this, they often do not correctly apply this definition in flight states deviating from steady horizontal flight. “Lift always points up” or “the resulting force is lift” are common naïve statements uttered by students. Questions 15–19 of our FliP-CoIn instrument challenge these beliefs by asking about aerodynamic lift while airplanes are in descent, in a loop, banking in a curve, and in a climb.

The most prevalent naïve concept probed with the FliP-CoIn instrument is: “Lift is bigger than weight when the airplane goes up.” While for high-scoring experts it is clear that this can never be true for unaccelerated flight (see also Figure 1), it is not clear for 91% of our students. Often the thrust force is overlooked as a contributor to balance the weight in steady climb flight. Also, lift seems to be confused with the resulting force of lift plus thrust, or is confused with a vector pointing in the direction of movement. Derek Muller found similar effects for a scenario where a ball is tossed through the air (2, 5).
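The expert reasoning for steady climb can be written as a short force balance. This is a simplified sketch under stated assumptions, not a derivation taken from the instrument: the flight is unaccelerated, the thrust $T$ acts along the flight path, $D$ is drag, $W$ is weight, $L$ is lift, and $\gamma$ is the climb angle between the flight path and the horizontal (all symbols are our own).

```latex
\begin{align}
  \text{along the flight path:} \quad & T - D - W \sin\gamma = 0 \\
  \text{perpendicular to it:} \quad & L - W \cos\gamma = 0
  \;\;\Rightarrow\;\; L = W \cos\gamma \le W
\end{align}
```

Under these assumptions, lift equals weight only in level flight ($\gamma = 0$) and is smaller than weight in any steady climb or descent. The component $W \sin\gamma$ of the weight is balanced by thrust, which is the contribution students tend to overlook.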

Conclusions

We showed that eliciting and changing concepts is important but difficult, especially in flight physics. Therefore, we have sketched a gamified lesson facilitating active and inclusive learning in teams. We elaborated on key phases of the lesson and its structure, and gave implementation tips for the gamified lecture. Based on our data and teaching experience, we assembled a Top 10 list for challenging naïve concepts in flight physics and elaborated on the most common student concepts concerning aerodynamic lift and drag. Beyond this, we developed ready-to-use supporting materials (slides, quizzes and video clips) showing how the questions of the newly developed Flight Physics Concept Inventory (FliP-CoIn) can be used to assess your students’ initial ideas and to enhance student learning through gamification. We have optimized the FliP-CoIn instrument for evaluation time via automatic scoring, its face validity through expert and think-aloud interviews, and its internal reliability through an item analysis. As an activity at the beginning of a lecture series, the tool informs teachers about the pre-conceptions that many students have. When also applied after a learning intervention, the FliP-CoIn instrument will highlight learning gains and answer questions such as, “Where was my teaching most effective?” or “Which method outperformed another one?” These data might become useful, not only for informing your own teaching, but also for colleagues or funding personnel in need of reliable, research-based assessment data on the effectiveness of your teaching approach.

Last but not least, we are eager to get feedback from you. For updates on FliP-CoIn, have a look at flip-coin.uni-koeln.de or write to us about as yet unpublished developments.

Scientific Teaching Themes

Active Learning

In the gamified lecture, team collaboration enhances the learning experience. This activity engages the teams in problem solving. The time pressure and instant feedback create both an emotional experience and cognitive dissonance. Previous studies have shown emotional experiences influence learning positively (36). To further enhance the learning objectives, students should be asked to reflect on their discussion and how they think they settled their answer (consensus finding strategies). This should be done without time pressure.

Assessment

The Flight Physics Concept Inventory was designed for quick feedback on individual students and for whole courses. Teachers can use the instrument to gain insight into preexisting concepts when given before a learning intervention or class. When given before and after a class, it informs students and teachers about learning gains and the effectiveness of learning strategies. The Flight Physics Concept Inventory (FliP-CoIn) is freely accessible for teachers via PhysPort.org. The additional questions can also be used in the gamified lecture sketched here. Student teams will immediately know if they have answered a question correctly when the interim team scores are displayed. The fast-paced game is ideal for self-evaluation.
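One common way to express learning gains from pre- and post-test data in physics education research is the normalized gain introduced by Hake (31): the achieved improvement divided by the maximum possible improvement. The sketch below assumes this measure for illustration; the article does not prescribe a particular gain measure, and the class averages shown are made up.

```python
def normalized_gain(pre_percent, post_percent):
    """Hake-style normalized gain: achieved gain divided by possible gain."""
    if not (0 <= pre_percent < 100 and 0 <= post_percent <= 100):
        raise ValueError("scores must be percentages, with pre below 100")
    return (post_percent - pre_percent) / (100 - pre_percent)


# Hypothetical class averages (percent correct, pre and post) per concept domain.
class_scores = {"lift": (30.0, 65.0), "drag": (40.0, 55.0)}

gains = {
    domain: normalized_gain(pre, post)
    for domain, (pre, post) in class_scores.items()
}

if __name__ == "__main__":
    for domain, gain in gains.items():
        print(f"{domain}: {gain:.2f}")
```

Computing the gain separately per concept domain is one way to approach the question of where teaching was most effective.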

Inclusive Teaching

In the gamified lecture, teams compete against one another, which encourages collaboration among team members. The activity should help to build a supportive community of learning. Using randomized or heterogeneous groups also exposes students to new perspectives. The aerodynamic bodies for questions 4 and 5 can be 3D printed, allowing for multisensory access. In addition, all picture-based questions can be 3D printed as a relief, giving blind students a more equal chance of understanding the question. The 3D meshes are available from the corresponding author.

Supporting Materials

- S1. FliP-CoIn – Videos, slides & quiz templates for a gamified lecture
- S2. FliP-CoIn – Lesson plan example
- S3. FliP-CoIn – Instrument questions [ENG]
- S4. FliP-CoIn – How to assess student concepts with the instrument

Acknowledgments

We would like to thank Scott V. Franklin, Daniel MacIsaac, Eleanor C. Sayre, Cyrill Slezak, Scott McComb, Benjamin Archibeque, Meliha Avcı, Martina Kramar, John-Luke Ingleson, Andreas Schadschneider, Irina Lemm, Aikaterini Mari, our families and the PEER institute for valuable feedback, support, care work and guidance. This work became significantly better because of you!

References

1. Madsen A, McKagan SB, Sayre EC. 2017. Resource letter Rbai-1: Research-based assessment instruments in physics and astronomy. Am J Phys 85:245–264. doi:10.1119/1.4977416.
2. Muller DA, Bewes J, Sharma MD, Reimann P. 2008. Saying the wrong thing: Improving learning with multimedia by including misconceptions. J Comput Assist Learn 24:144–155. doi:10.1111/j.1365-2729.2007.00248.x.
3. Muller D. 2012. The key to effective educational science videos. https://tedxsydney.com/talk/derek-muller-the-key-to-effective-educational-science-videos/ (accessed 19 March 2023).
4. Harmon-Jones E, Mills J. 2019. An introduction to cognitive dissonance theory and an overview of current perspectives on the theory, p 3–24. In Harmon-Jones E (ed), Cognitive dissonance: Reexamining a pivotal theory in psychology, 2nd ed. American Psychological Association, Washington, DC. doi:10.1037/0000135-001.
5. Muller DA. 2008. PhD thesis. Designing effective multimedia for physics education. University of Sydney, Sydney, Australia.
6. Smith III JP, diSessa AA, Roschelle J. 1994. Misconceptions reconceived: A constructivist analysis of knowledge in transition. J Learn Sci 3:115–163. doi:10.1207/s15327809jls0302_1.
7. Weltner K. 1990. Bernoulli’s law and aerodynamic lifting force. Phys Teach 28:84–86. doi:10.1119/1.2342945.
8. Einstein A. 1916. Elementare Theorie der Wasserwellen und des Fluges. Naturwissenschaften 4:509–510. doi:10.1007/BF01491471.
9. NASA Aeronautics, American Association of Physics Teachers. 2015. With you when you fly: Aeronautics for introductory physics. AIP Publishing. doi:10.1063/9780735421547.
10. DPG. 2016. Physik in der Schule. https://www.dpg-physik.de/veroeffentlichungen/publikationen/studien-der-dpg/studie-physik-in-der-schule (accessed 19 March 2023).
11. Hoefnagels M, Taylor MS. 2016. “Boost your evolution IQ”: An evolution misconceptions game. CourseSource 3. doi:10.24918/cs.2016.12.
12. Weltner K. 1987. A comparison of explanations of the aerodynamic lifting force. Am J Phys 55:50–54. doi:10.1119/1.14960.
13. McLean D. 2018. Aerodynamic lift, part 1: The science. Phys Teach 56:516–520. doi:10.1119/1.5064558.
14. McLean D. 2018. Aerodynamic lift, part 2: A comprehensive physical explanation. Phys Teach 56:521–524. doi:10.1119/1.5064559.
15. Suarez A, Kahan S, Zavala G, Marti AC. 2017. Students’ conceptual difficulties in hydrodynamics. Phys Rev Phys Educ Res 13:020132. doi:10.1103/PhysRevPhysEducRes.13.020132.
16. Waltham C. 1998. Flight without Bernoulli. Phys Teach 36:457–462. doi:10.1119/1.879927.
17. Højgaard Jensen J. 2013. Introducing fluid dynamics using dimensional analysis. Am J Phys 81:688–694. doi:10.1119/1.4813064.
18. Wodzinski R, Ziegler A. 2000. Schulpraxis-Erklärung des Fliegens in der Schule. Der mathematische und naturwissenschaftliche Unterricht 53:273–280.
19. Send W. 2001. Aerodynamik: Physik des Fliegens: Ein reizvolles Thema, jungen Leuten die Gesetze der Mechanik lebendig zu vermitteln. Phys Bl 57:51–58. doi:10.1002/phbl.20010570613.
20. Federal Aviation Administration. 2016. Pilot’s handbook of aeronautical knowledge FAA-H-8083-25B. United States Department of Transportation, Federal Aviation Administration, Oklahoma City, OK.
21. Torenbeek E, Wittenberg H. 2009. Flight physics: Essentials of aeronautical disciplines and technology, with historical notes. Springer-Verlag GmbH, Heidelberg, Germany.
22. McLean D. 2012. Understanding aerodynamics: Arguing from the real physics. John Wiley & Sons, West Sussex, United Kingdom. doi:10.1002/9781118454190.
23. Weltner K. 2002. Flugphysik – um das Fliegen zu verstehen. Der mathematische und naturwissenschaftliche Unterricht 55/7:388–396.
24. Waltham C. 1999. The flight of a balsa glider. Am J Phys 67:620–623. doi:10.1119/1.19334.
25. Anderson DF, Eberhardt S. 2010. Understanding flight, 2nd ed. McGraw-Hill, New York, NY.
26. Pawl A. 2020. Using Force Concept Inventory data to develop impactful class activities. Phys Teach 58:94–96. doi:10.1119/1.5144787.
27. Choi Y, Jakob S, Anderson WJ. 2017. Active learning: Developing self-directed learners through strong intellectual engagement. CourseSource 4. doi:10.24918/cs.2017.20.
28. Subhash S, Cudney EA. 2018. Gamified learning in higher education: A systematic review of the literature. Comput Human Behav 87:192–206. doi:10.1016/j.chb.2018.05.028.
29. Sokoloff DR, Thornton RK. 1997. Using interactive lecture demonstrations to create an active learning environment. AIP Conference Proceedings 399:1061–1074. doi:10.1063/1.53109.
30. Deslauriers L, McCarty LS, Miller K, Callaghan K, Kestin G. 2019. Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proc Natl Acad Sci 116:19251–19257. doi:10.1073/pnas.1821936116.
31. Hake RR. 1998. Interactive-engagement versus traditional methods: A six-thousand-student survey of mechanics test data for introductory physics courses. Am J Phys 66:64–74. doi:10.1119/1.18809.
32. Genz F, Vieyra RE. 2015. Evaluating the use of flight simulators for the NASA/AAPT “Aeronautics for introductory physics” educator guide, p 139–149. In Thoms L-J, Girwidz R (ed), Selected Papers from the 20th International Conference on Multimedia in Physics Teaching and Learning. European Physical Society, Mulhouse, France. doi:10.5282/ubm/epub.28963.
33. Genz F. 2015. Inquiry lesson/lab: Bernoulli effect lab, p 65–72. In Vieyra RE, Springer T, Gipson L, Werries M, Schultz J (ed), With you when you fly: Aeronautics for introductory physics. AIP Publishing. doi:10.1063/9780735421547_004.
34. Mislevy RJ. 1986. Recent developments in the factor analysis of categorical variables. J Educ Stat 11:3–31. doi:10.3102/10769986011001003.
35. Andrews TM, Leonard MJ, Colgrove CA, Kalinowski ST. 2011. Active learning not associated with student learning in a random sample of college biology courses. CBE Life Sci Educ 10:394–405. doi:10.1187/cbe.11-07-0061.
36. Guo J, Zou T, Peng D. 2018. Dynamic influence of emotional states on novel word learning. Front Psychol 9. doi:10.3389/fpsyg.2018.00537.
