STEM Writing Project

Bins-Based Scoring

The Challenge

One of the perennial complaints students have about their scientific writing training is that they are not graded fairly or consistently. The problem is exacerbated in programs where most undergraduate lab reports are graded by GTAs, not faculty.

A priority when we train GTAs is ensuring they know how to grade students fairly, and that we have good inter-grader reliability (different GTAs give similar scores for work of similar quality). We also want GTAs to know how to give actionable feedback that helps their students grow as writers. Finally, we want GTAs to spend their grading time efficiently.

To accomplish those goals, our GTA training program includes a general writing orientation and practice sessions, and round-robin grading where new and experienced GTAs grade the same pre-selected set of reports, then discuss any discrepancies in scoring.

Rather than have GTAs write out their own recommendations, we train them to refer students back to specific sections of our Resource Guide. This reinforces to undergraduates that they have the resources they need to be successful writers, and that their instructors are not arbitrary gatekeepers of what students should or should not do. It also reduces GTA workload.

Finally, we use bins scoring, a grading strategy based on Linda Nilson's specifications grading model. Briefly, we limit the number of items used for scoring reports, and only score those items on a binary scale (present/absent, yes/no, etc.).

Our Approach

Bins Grading Criteria

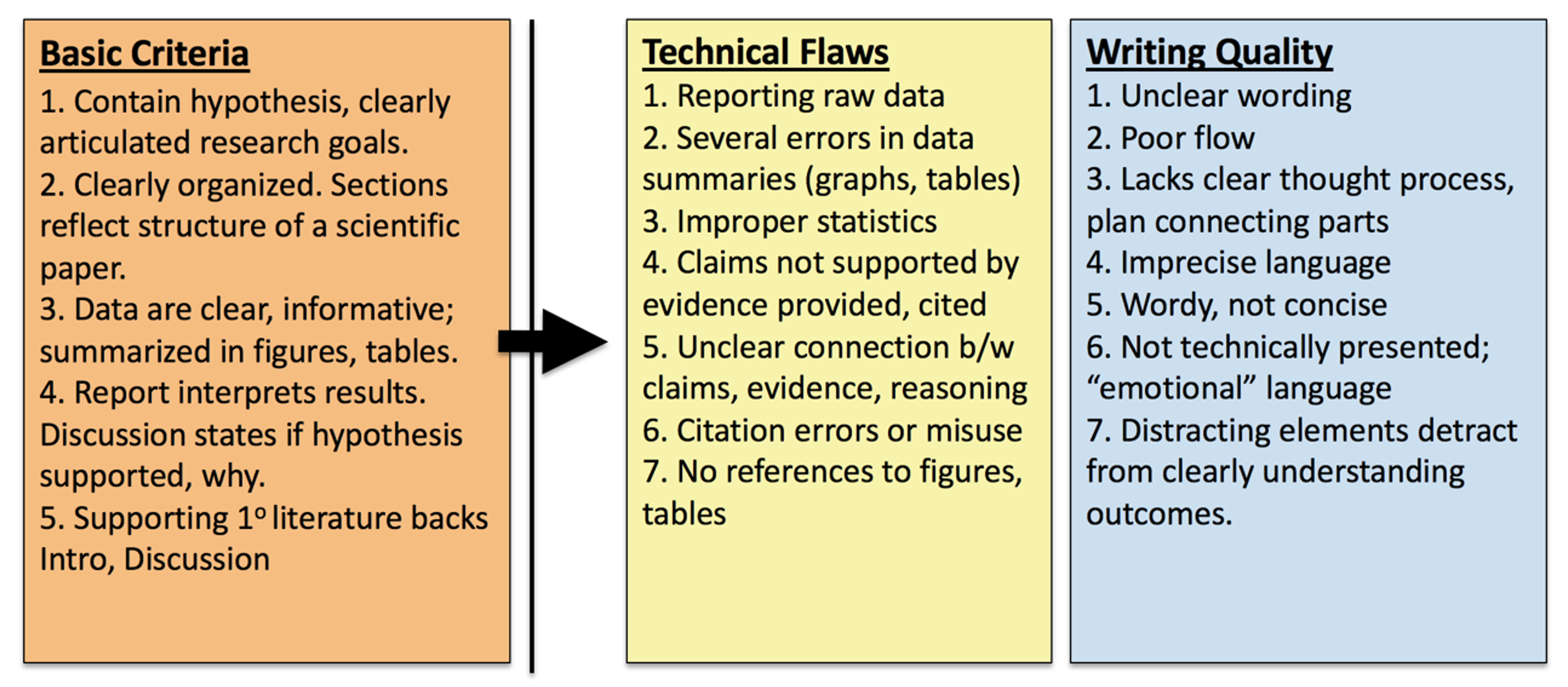

We do not assign numeric grades based on points, but instead describe the overall quality of a student's work relative to our goals. These are the terms we use, and the criteria we use to assign scores to lab reports in our 100-level lab courses. The criteria are divided into 3 categories: basic criteria, technical flaws, and writing quality flaws.

The five Basic Criteria reflect the fundamental structural errors that we have historically seen most often. These are strict minimum requirements; if a student’s report does not meet them, it is marked "Unacceptable" and returned with minimal feedback. While this seems overly harsh, in practice it is not.

- Prior to implementation, up to 36% of all reports submitted lacked one or more of the basic requirements for a report to be considered complete.

- One year after implementing the 5 basic criteria requirement, the number of incomplete reports submitted for grading declined to 5.5% of all draft reports, and <2% for final versions.

- GTAs reported spending less time trying to comment on incomplete reports, and could focus on more meaningful revisions.

- End-of-semester averages for student report scores rose 4-12%.

Items listed under Technical Flaws or Writing Quality Flaws also are our most frequently encountered errors; other flaws are outlined in our Resource Guide. Again, technical and writing quality flaws are marked on a binary "yes/no" scale. Reports that meet basic criteria are scored as having:

- Both technical and writing flaws,

- Either technical or writing flaws, or

- Minimal or no basic technical or writing flaws.
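The decision rule described above can be sketched in a few lines of Python. This is only an illustration, not the project's actual tooling: the `ReportFlags` class, its field names, and the `assign_bin` function are hypothetical, and the bin labels are copied from the list above.

```python
from dataclasses import dataclass


@dataclass
class ReportFlags:
    """Binary observations a grader records for one report (hypothetical structure)."""
    basic_criteria_met: int   # how many of the basic criteria the report satisfies
    technical_flaws: bool     # True if any listed technical flaw is present
    writing_flaws: bool       # True if any listed writing quality flaw is present


def assign_bin(flags: ReportFlags, n_basic: int = 5) -> str:
    """Return the bin for a report, checking basic criteria first."""
    # Missing any basic criterion short-circuits everything else.
    if flags.basic_criteria_met < n_basic:
        return "Unacceptable"
    if flags.technical_flaws and flags.writing_flaws:
        return "Both technical and writing flaws"
    if flags.technical_flaws or flags.writing_flaws:
        return "Either technical or writing flaws"
    return "Minimal or no technical or writing flaws"


if __name__ == "__main__":
    print(assign_bin(ReportFlags(5, technical_flaws=True, writing_flaws=False)))
```

Because every input is a yes/no judgment, two graders who agree on the three observations will always land on the same bin, which is the point of the approach.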

Our philosophy is that writers can always improve, and we try to instill that idea in students. Reports that earn the highest bin score have met all requirements for introductory writing, but can still have minor errors. These can be addressed in other courses; our priority is establishing foundational skills and features of scientific writing.

Submission/Feedback/Revision Cycle

Bins grading is more effective when students have the opportunity to make changes based on the feedback. We routinely require students to submit lab reports twice. Students upload an initial submission version, and GTAs have 7 days to score the reports, provide feedback comments, and return the submission versions to students. The students then have another 7 days to make changes and post a revised report for final grading. Only the score on the revised version is recorded.

Initially we found that students misunderstood our expectations, and (despite repeated reminders) would submit incomplete drafts in the first round. This limited GTAs' ability to provide useful feedback. We addressed this in three ways:

- Automated pre-checks. Students must upload both their initial submission and final revised versions of reports to SAWHET (our automated report collection system). SAWHET is a web form that provides immediate feedback on whether all components are present, and checks for basic mechanical errors. If a report is not cleared by SAWHET, students can revise and submit again until the submission deadline; only the most recent submission is forwarded to GTAs.

- Minimum content requirements. Student reports had to contain all of the sections of a complete lab report. Incomplete reports earned an automatic score of "0". To help students meet this, the SAWHET web form flagged and rejected any report missing essential sections.

- Minimum submission scores. Students' initial submissions had to be complete and meet all 5 basic criteria to earn a score of "2." Those that did could earn a score of 2, 3, or 4 on revised reports. Students who submitted a complete report but did not meet all 5 basic criteria earned a "1" (unacceptable) and could only earn a 1, 2, or 3 on revised reports. Students who failed to submit a complete report (score of "0") could not earn higher than "2" on their revised report.
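The minimum submission score rule can be expressed as a small lookup, sketched below in Python. The function and variable names are hypothetical. Two points are assumptions rather than stated policy: the lower bound of 0 for students whose initial score was "0" (the text gives only the ceiling of 2), and the choice to clamp a revised report's merit-based score into the allowed range.

```python
# Allowed (lowest, highest) revised score for each initial-submission score.
# The ranges for 2 and 1 come from the rule above; the floor of 0 for an
# initial score of 0 is an assumption, since only the ceiling is stated.
REVISED_RANGE = {2: (2, 4), 1: (1, 3), 0: (0, 2)}


def initial_score(is_complete: bool, meets_all_basic: bool) -> int:
    """Score an initial submission as 0, 1, or 2."""
    if not is_complete:
        return 0          # incomplete report
    if not meets_all_basic:
        return 1          # complete, but missing one or more basic criteria
    return 2              # complete and meets all 5 basic criteria


def recorded_score(initial: int, revised_merit: int) -> int:
    """Clamp the revised report's merit-based score into the allowed range."""
    low, high = REVISED_RANGE[initial]
    return max(low, min(high, revised_merit))


if __name__ == "__main__":
    first = initial_score(is_complete=True, meets_all_basic=False)
    print(first, recorded_score(first, revised_merit=4))
```

Under this reading, a strong revision cannot fully erase a weak first draft, which gives students a concrete reason to submit a complete report in the first round.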

How Bin Scores Translate to Grades

These are the terms we use, and how they translate to assignment grades for computing course grades.

| Descriptive Term | Criteria | Translated Grade |
| --- | --- | --- |
| Acceptable | Meets all basic criteria, and no significant writing or technical flaws | A/95% |
| Needs minor revisions | Meets all basic criteria, has EITHER writing OR technical flaws | B/85% |
| Needs major revisions | Meets all basic criteria, has BOTH writing AND technical flaws | C/75% |
| Submitted but unacceptable | One or more basic criteria are missing | F/55% |
| No report | Not submitted, or plagiarized | F/0% |
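As a rough illustration of how the table feeds into course grade calculations, the sketch below stores the translation as a dictionary and averages the percentages for a set of reports. The names `GRADE_TABLE`, `translate`, and `mean_percent` are hypothetical, and an unweighted mean is an assumption; a course may weight reports differently.

```python
# Descriptive term -> (letter grade, percentage), copied from the table above.
GRADE_TABLE = {
    "Acceptable": ("A", 95),
    "Needs minor revisions": ("B", 85),
    "Needs major revisions": ("C", 75),
    "Submitted but unacceptable": ("F", 55),
    "No report": ("F", 0),
}


def translate(term: str) -> tuple[str, int]:
    """Look up the letter grade and percentage for a descriptive term."""
    return GRADE_TABLE[term]


def mean_percent(terms: list[str]) -> float:
    """Unweighted mean percentage across a student's report scores."""
    return sum(GRADE_TABLE[t][1] for t in terms) / len(terms)


if __name__ == "__main__":
    reports = ["Acceptable", "Needs minor revisions", "Needs major revisions"]
    print(translate(reports[0]))   # ('A', 95)
    print(mean_percent(reports))   # 85.0
```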

Lessons Learned

In parallel grading comparisons, we found that end-of-semester lab grades were consistently higher under the bins-scored protocol. Moreover, differences in mean and median lab course grades between GTAs teaching in the same course declined from 4-9% in courses that did not use bins scoring to 1-3% in courses that did.

Bins grading also reduced the number of negative comments about grading on student evaluations from 47% of all comments to 18% in one year.

Available Project Resources

| Resources | Links |
| --- | --- |
| Bins Scoring Guide for New GTAs | DOCX file |
| 2019 AACU/PKAL Transforming STEM Higher Education Conference Workshop | |
| Workshop presentation | PPTX file |
| Workshop handout | DOCX file |
| Workshop practice reports | DOCX file |
| GTA and new instructor training activities | Local web page |
| 2020 ViABLE meeting workshop on using bins grading | Link to publication |

Looking Ahead

We are evaluating whether to revise our bins grading scale to separate out flaws in logic, argument, and evidence. Adding a third category above the basic criteria would address some challenges around assigning grades, but would make the grading process more complicated. Check the list of To Do items for the Bins Scoring project to learn more about this and other related activities.

Let us know if you want to contribute to one or more associated projects, or have other resources you would like to contribute.

Where to Learn More

- Hall, M. 2018. What is Specifications Grading and Why Should You Consider Using It? The Innovative Instructor Blog. https://ii.library.jhu.edu/2018/04/11/what-is-specifications-grading-and-why-should-you-consider-using-it/ (accessed 11/4/2020)

- Nilson, LB. 2014. Specifications Grading: Restoring Rigor, Motivating Students and Saving Faculty Time. Stylus Publishing. 184pp. https://styluspub.presswarehouse.com/browse/book/9781620362426/Specifications-Grading

- Supiano, B. 2022. The Unintended Consequences of ‘Ungrading’: Does getting rid of grades make things worse for disadvantaged students? Chronicle of Higher Education, April 29, 2022. https://www.chronicle.com/article/the-unintended-consequences-of-ungrading
